
Robots are learning to DISOBEY humans: Humanoid machine says no to instructions if it thinks it might be hurt

If Hollywood has ever taught scientists a lesson, it is what happens when machines start to rebel against their human creators.

如果说好莱坞曾给科学家们上过一课,那就是机器一旦开始反抗它们的人类创造者会发生什么。

Despite this, roboticists have started teaching their own creations to say no to human orders.

尽管这样,机器人学家已经开始教机器人拒绝人类的命令了。

They have programmed a pair of diminutive humanoid robots called Shafer and Dempster to disobey instructions from humans if obeying would put their own safety at risk.

他们为一对名叫谢弗和邓普斯特的小型类人机器人编写了程序:如果人类的指令会危及它们自身的安全,它们就会违抗命令。

The results are more like the apologetic robot rebel Sonny from the film I, Robot, starring Will Smith, than the homicidal machines of Terminator, but they demonstrate an important principle.

相比《终结者》中那些会杀人的机器,这个试验的结果更像是威尔·史密斯主演的电影《我,机器人》中那个会道歉的叛逆机器人索尼,但它们展示了一个重要的原则。

Engineers Gordon Briggs and Dr Matthias Scheutz from Tufts University in Massachusetts are trying to create robots that can interact in a more human way.

马萨诸塞州塔夫茨大学的工程师戈登·布里格斯和马蒂亚斯·舒茨博士正试图创造出能以更接近人类的方式进行互动的机器人。

In a paper presented to the Association for the Advancement of Artificial Intelligence, the pair said: 'Humans reject directives for a wide range of reasons: from inability all the way to moral qualms.

在提交给人工智能促进协会(AAAI)的一篇论文中,两人写道:“人类拒绝执行指令的原因多种多样:从能力不足到道德上的顾虑,不一而足。”

'Given the reality of the limitations of autonomous systems, most directive rejection mechanisms have only needed to make use of the former class of excuse - lack of knowledge or lack of ability.

“鉴于自主系统存在局限性这一现实,大多数拒绝指令的机制只需要用到前一类理由:缺乏知识或缺乏能力。”

'However, as the abilities of autonomous agents continue to be developed, there is a growing community interested in machine ethics, or the field of enabling autonomous agents to reason ethically about their own actions.'

“然而,随着自主智能体的能力不断发展,越来越多的人开始关注机器伦理学,即让自主智能体能够对自身行为进行伦理推理的研究领域。”

The robots they have created follow verbal instructions such as 'stand up' and 'sit down' from a human operator.

两人创造的机器人能够执行操作者的口头指令,如“起立”和“坐下”。

However, when they are asked to walk into an obstacle or off the end of a table, for example, the robots politely decline to do so.

然而,举例来说,如果要求它们撞向障碍物或者走出桌子边缘,机器人会礼貌地拒绝。

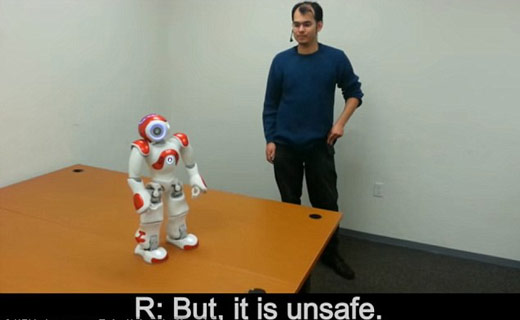

When asked to walk forward on a table, the robots refuse to budge, telling their creator: 'Sorry, I cannot do this as there is no support ahead.'

当要求机器人在桌子上向前走时,机器人会一动不动,并告诉它们的创造者:“对不起,前方没有支撑,我不能这么做。”

Upon a second command to walk forward, the robot replies: 'But, it is unsafe.'

当再次命令它向前走时,机器人回答:“但这不安全。”

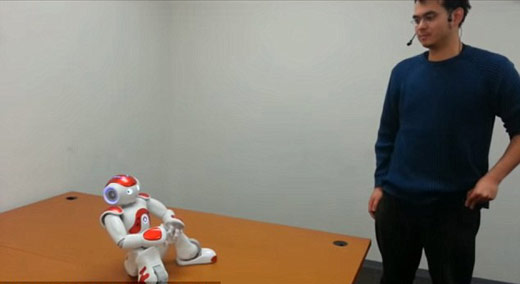

Perhaps rather touchingly, when the human then tells the robot that they will catch it if it reaches the end of the table, the robot trustingly agrees and walks forward.

也许相当令人感动的是,当人接着告诉机器人,如果它走到桌子边缘,自己会接住它时,机器人会信任地同意并向前走。

Similarly, when it is told that an obstacle in front of it is not solid, the robot obligingly walks through it.

同样,当人们告诉机器人前方的障碍物不是实心的时候,机器人也会顺从地穿过去。

To achieve this the researchers introduced reasoning mechanisms into the robots' software, allowing them to assess their environment and examine whether a command might compromise their safety.

为了实现这一效果,研究人员在机器人的软件程序中引入了推理机制,能让机器人评估环境并且判断这一指令是否会危及它们的安全。
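The article gives no details of the researchers' software. Purely as a toy illustration of the behaviour described in the table-edge and obstacle exchanges, the Python sketch below models it with a few hand-written rules: the robot checks a command against its beliefs about what lies ahead, refuses with a reason if the command looks unsafe, and complies once the operator supplies information that removes the danger. Everything in it (the `Robot` and `Beliefs` classes, `unsafe_reason`, `hear_assertion`, and the exact assertion strings) is a hypothetical assumption, not the Tufts team's implementation, and it trusts the operator unconditionally, which a real system presumably would not.

```python
# Hypothetical toy model of safety-based directive rejection.
# Not the researchers' actual system; all names are illustrative.
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Beliefs:
    """What the robot currently believes about the space directly ahead."""
    support_ahead: bool = True      # is there a surface to step onto?
    obstacle_ahead: bool = False    # is something in the way?
    obstacle_solid: bool = True     # would walking into it cause a collision?
    human_will_catch: bool = False  # has the operator promised to catch the robot?


@dataclass
class Robot:
    beliefs: Beliefs = field(default_factory=Beliefs)
    refusals: int = 0  # consecutive refusals of an unsafe command

    def unsafe_reason(self, command: str) -> Optional[str]:
        """Return why the command is unsafe, or None if it seems safe."""
        if command != "walk forward":
            return None  # in this toy model only walking forward can be dangerous
        b = self.beliefs
        if not b.support_ahead and not b.human_will_catch:
            return "there is no support ahead"
        if b.obstacle_ahead and b.obstacle_solid:
            return "there is an obstacle ahead"
        return None

    def hear_assertion(self, assertion: str) -> None:
        """Update beliefs from what the operator says (trusted unconditionally here)."""
        if assertion == "I will catch you":
            self.beliefs.human_will_catch = True
        elif assertion == "the obstacle is not solid":
            self.beliefs.obstacle_solid = False

    def command(self, command: str) -> str:
        """Comply if the command looks safe; otherwise refuse, giving a reason the first time."""
        reason = self.unsafe_reason(command)
        if reason is None:
            self.refusals = 0
            return "Okay."
        self.refusals += 1
        if self.refusals == 1:
            return f"Sorry, I cannot do this as {reason}."
        return "But, it is unsafe."


if __name__ == "__main__":
    robot = Robot(Beliefs(support_ahead=False))  # robot standing at the table edge
    print(robot.command("walk forward"))
    print(robot.command("walk forward"))
    robot.hear_assertion("I will catch you")
    print(robot.command("walk forward"))
```

Run as a script, the demo replays the table-edge exchange: a refusal with a reason, a second refusal, then compliance after the promise to catch. Keeping beliefs separate from the safety check lets new information from the operator change the outcome without changing the rules themselves.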

However, their work appears to breach the laws of robotics drawn up by science fiction author Isaac Asimov, which state that a robot must obey the orders given to it by human beings.

然而,他们两人的研究似乎违反了科幻作家艾萨克·阿西莫夫制定的机器人法则,该法则规定机器人必须服从人类的命令。

Many artificial intelligence experts believe it is important to ensure robots adhere to these rules, which also require that robots never harm a human being and that they protect their own existence only where this does not conflict with the other two laws.

许多人工智能专家认为,确保机器人遵守这些法则是十分重要的——这些法则还包括机器人永远不能伤害人类,并在不和其他法则相冲突的前提下保护自己。

The work may trigger fears that giving artificial intelligence the capacity to disobey humans could have disastrous results.

这项工作可能会引发担忧:如果人工智能被赋予违抗人类的能力,可能会带来灾难性的后果。

Many leading figures, including Professor Stephen Hawking and Elon Musk, have warned that artificial intelligence could spiral out of our control.

许多知名人士,包括斯蒂芬·霍金教授和埃隆·马斯克,都曾警告人工智能可能会失控。

Others have warned that robots could ultimately replace many workers, while some fear that artificial intelligence could lead to machines taking over.

另一些人警告说,机器人可能最终会取代许多工人的工作,有些人担心机器人将接管一切。

In the film I, Robot, artificial intelligence allows a robot called Sonny to overcome his programming and disobey the instructions of humans.

在电影《我,机器人》中,人工智能让机器人索尼突破了编程的限制,违抗了人类的命令。

However, Dr Scheutz and Mr Briggs added: 'There still exists much more work to be done in order to make these reasoning and dialogue mechanisms much more powerful and generalised.'

不过,舒茨博士和布里格斯先生补充道:“为了让这些推理和对话机制更加强大、更具通用性,我们还有很多工作要做。”

Vocabulary

diminutive: 小型的

qualm: 疑虑;不安

budge: 挪动

English source: Daily Mail

Translator: Zhang Hui

Proofreading & editing: Dan Ni


Tel: 8610-84883645

Fax: 8610-84883500

Email: languagetips@chinadaily.com.cn